cross-posted from: https://lemmy.world/post/31184706

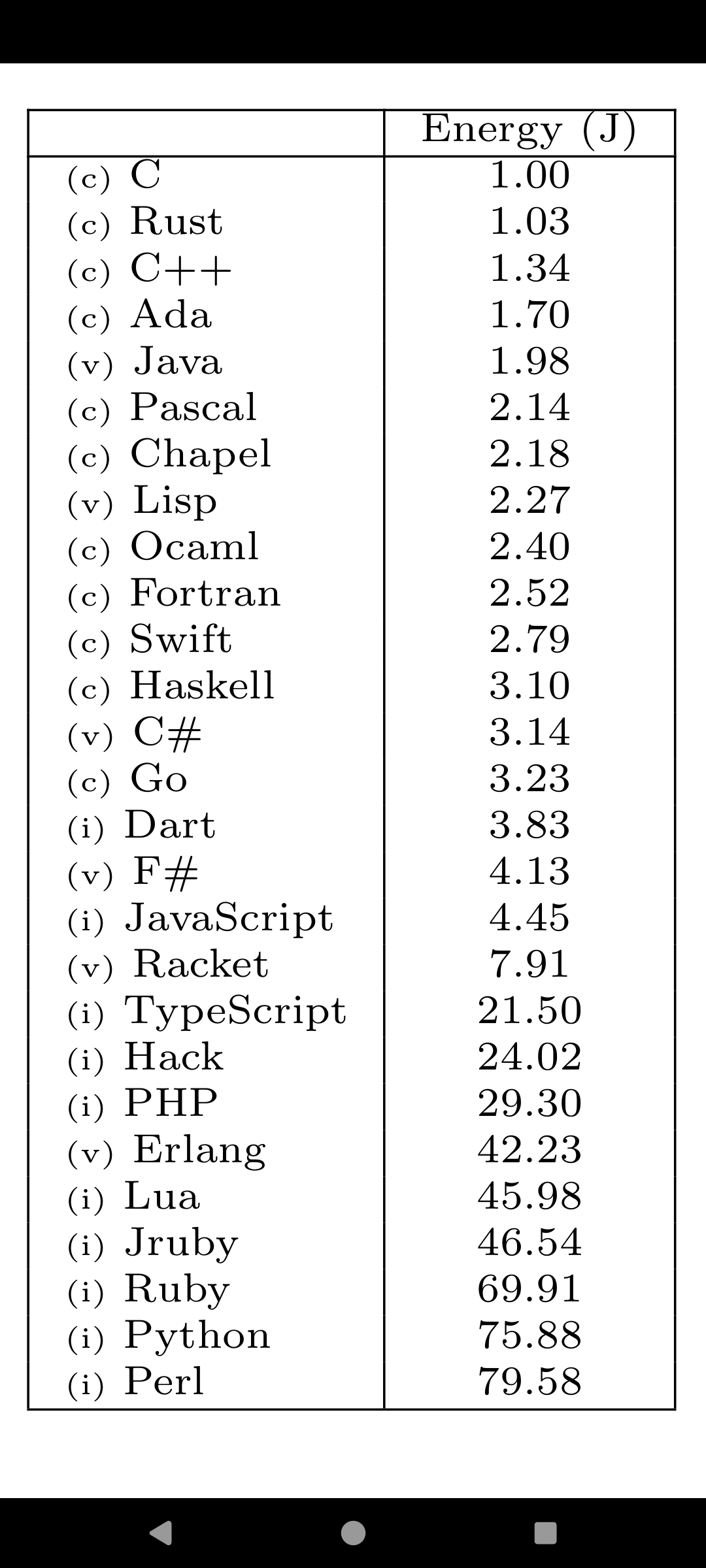

C is one of the top languages in terms of speed, memory and energy

https://www.threads.com/@engineerscodex/post/C9_R-uhvGbv?hl=en

If you want top speed, Fortran is faster than C.

To run, perhaps. But what about the same metrics for debugging? How many hours do we spend debugging C/C++ issues?

True but it’s also a cock to write in

What if we make a new language that extends it and makes it fun to write? What if we call it c+=1?

This doesn’t account for all the comfort food the programmer will have to consume in order to keep themselves sane

Machine energy, definitely not programmer energy ;)

I would argue that because C is so hard to program in, even the claim to machine efficiency is arguable. Yes, if you have infinite time for implementation, then C is among the most efficient, but then the same applies to C++, Rust and Zig too, because with infinite time any artificial hurdle can be cleared by the programmer.

In practice however, programmers have limited time. That means they need to use the tools of the language to save themselves time. Languages with higher levels of abstraction make it easier, not harder, to reach high performance, assuming the abstractions don’t provide too much overhead. C++, Rust and Zig all apply in this domain.

An example is the situation where you need a hash map or B-Tree map to implement efficient lookups. The languages with higher abstraction give you reusable, high-performance options. The C programmer will need to either roll his own, which may not be an option if time is limited, or choose a lower-performance alternative.

I understand your point but come on, basic stuff has been implemented in a thousand libraries. There you go, a macro implementation

And how testable is that solution? Sure, macros are helpful, but testing and debugging them is a mess.

You mean whether the library itself is testable? I have no idea, I didn’t write it. It’s been stable and out there for years.

Whether the program is testable? Why wouldn’t it be? I could debug it just fine. Of course it’s not as easy as Go or Python, but let’s not pretend it’s some arcane dark art.

Yes, I mean mocking, faking, et al. Not this particular library, but macros in general.

> The C programmer will need to either roll his own, which may not be an option if time is limited, or choose a lower-performance alternative.

What are you talking about? https://docs.gtk.org/glib/data-structures.html

Well, let’s be real: many C programs don’t want to rely on Glib, and licensing (as the other reply mentioned) is only one reason. Glib is not exactly known for high performance, and is significantly slower than the alternatives supported by the other languages I mentioned.

OK, think of all the other C collection libraries there must be out there!

Which one should I pick then, that is both as fast as the std solutions in the other languages and as reusable for arbitrary use cases?

Because it sounds like your initial pick made you lose the machine efficiency argument, and you can’t have it both ways.

For raw computation, yes. Most programs aren’t raw computation. They run in and out of memory a lot, or are tapping their feet while waiting 2ms for the SSD to get back to them. When we do have raw computation, it tends to be passed off to a C library, anyway, or else something that runs on a GPU.

We’re not going to significantly reduce datacenter energy use just by rewriting everything in C.

And in most cases that’s not good enough to justify choosing C.

For those who don’t want to open threads, it’s a link to a paper on energy efficiency of programming languages.

Results

For Haskell to land that low on the list tells me they either couldn’t find a good Haskell programmer and/or weren’t using GHC.

Also the difference between TS and JS doesn’t make sense at first glance. 🤷‍♂️ I guess I need to read the research.

My first thought is perhaps the TS is not targeting ESNext so they’re getting hit with polyfills or something

It does, the “compiler” adds a bunch of extra garbage for extra safety that really does have an impact.

I thought the idea of TS is that it strongly types everything so that the JS interpreter doesn’t waste all of its time trying to figure out the best way to store a variable in RAM.

TS is compiled to JS, so the JS interpreter isn’t privy to the type information. TS is basically a robust static analysis tool
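To make the "not privy to the type information" point concrete, here is a minimal sketch. The `Point` interface and `norm` function are made up for illustration, and the emitted JavaScript in the comment is roughly what a modern target produces, not the output of any specific build.

```typescript
// Interfaces and type annotations exist only at compile time.
interface Point {
  x: number;
  y: number;
}

function norm(p: Point): number {
  return Math.sqrt(p.x * p.x + p.y * p.y);
}

// With a modern target, the JS engine sees roughly this, with no types left:
//   function norm(p) { return Math.sqrt(p.x * p.x + p.y * p.y); }

// A type assertion checks and converts nothing at runtime.
// The value below claims to be a Point, but x is really a string.
const notReallyAPoint = JSON.parse('{"x": "3", "y": 4}') as Point;

console.log(norm({ x: 3, y: 4 }));      // 5
console.log(typeof notReallyAPoint.x);  // "string"
```

So the engine still has to work out value representations on its own, exactly as it does for hand-written JS.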

The code is ultimately run in a JS interpreter. AFAIK TS transpiles into JS, there’s no TS-specific interpreter. But such a huge difference is unexpected to me.

It’s really not. Have you noticed how an enum is transpiled? You end up with a function… a lot of other things follow the same pattern.

No they don’t. Enums are actually unique in being the only Typescript feature that requires code gen, and they consider that to have been a mistake.

In any case that’s not the cause of the difference here.

Only if you choose a lower language level as the target. Given these results I suspect the researchers had it output JS for something like ES5, meaning a bunch of polyfills for old browsers that they didn’t include in the JS-native implementation…

Not really, because this stuff also happens, and a function call always has an impact: https://stackoverflow.com/questions/20278095/enums-in-typescript-what-is-the-javascript-code-doing

Yeah sure, you found the one notorious TypeScript feature that actually emits code, but a) this feature is recommended against and not used much to my knowledge and, more importantly, b) you cannot tell me that you genuinely believe the use of TypeScript enums – which generate extra function calls for a very limited number of operations – will 5x the energy consumption of the entire program.
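For anyone who hasn't looked at the output, this is roughly what the enum argument is about. The `Color` enum is a made-up example, and the JavaScript in the comment is what `tsc` emits for a plain numeric enum with default settings, as far as I know.

```typescript
enum Color {
  Red,
  Green,
  Blue,
}

// tsc emits roughly this JavaScript for the enum above:
//
//   var Color;
//   (function (Color) {
//       Color[Color["Red"] = 0] = "Red";
//       Color[Color["Green"] = 1] = "Green";
//       Color[Color["Blue"] = 2] = "Blue";
//   })(Color || (Color = {}));
//
// The function runs once, when the module loads, and fills in a plain object
// that maps names to numbers and numbers back to names.

console.log(Color.Green);         // 1
console.log(Color[1]);            // "Green"
console.log(Object.keys(Color));  // ["0", "1", "2", "Red", "Green", "Blue"]
```

After that one-time setup, `Color.Green` is an ordinary property read on an ordinary object, which is why it is hard to see it multiplying a program's energy use.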

This isn’t true, there are other features that “emit code”. That includes namespaces, decorators and in some cases even async / await (when targeting ES5 or ES6).
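Namespaces are the easiest of those to show. A small sketch, with a hypothetical `Geometry` namespace; the emitted code in the comment is approximately what `tsc` produces.

```typescript
namespace Geometry {
  export const unit = 1;
  export function area(width: number, height: number): number {
    return width * height;
  }
}

// tsc emits roughly this JavaScript for the namespace above:
//
//   var Geometry;
//   (function (Geometry) {
//       Geometry.unit = 1;
//       function area(width, height) {
//           return width * height;
//       }
//       Geometry.area = area;
//   })(Geometry || (Geometry = {}));

console.log(typeof Geometry);      // "object"
console.log(Geometry.area(2, 3));  // 6
```

Same shape as the enum output: a function that runs once and populates a plain object.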

I have a hard time believing Java is that high up. I’d place it around C#.

Why?

(A super slimmed-down flavour of) Java runs on fucking SIM cards.

Because usually they use the super fat flavor of Java. Jabba Fatt tier of lardiness Java.

I’m using the fattest of Java (Kotlin) on the fattest of frameworks (Spring Boot) and it is still decently fast on a 5-year-old Raspberry Pi. I can hit precise 50 μs timings with it.

Imagine doing it in fat python (as opposed to micropython) like all the hip kids.

Does the paper take into account the energy required to compile the code, the complexity of debugging and thus the required re-compilations after making small changes? Because IMHO that should all be part of the equation.

It’s a good question, but I think the amount of time spent compiling a language is going to be pretty tiny compared to the amount of time the application is running.

Still - “energy efficiency” may be the worst metric to use when choosing a language.